Category Archives: Windows

Windows based articles and tools

The Azure Container Service

The Azure Container Service (ACS) is a cloud-based container deployment and management service that supports popular open-source tools and technologies for containers and container orchestration. ACS lets you run containers at scale in production and manages the underlying infrastructure by configuring the appropriate VMs and clusters for you. ACS is orchestrator-agnostic, so you can use the container orchestration solution that best suits your needs. Learn how to use ACS to scale and orchestrate applications using DC/OS, Docker Swarm, or Kubernetes.

Azure Container Instances let you run a container in Azure without managing virtual machines and without a higher-level service.

How to build container instances

Cluster Shared Volumes

Cluster Shared Volumes (CSV) is a feature of Failover Clustering first introduced in Windows Server 2008 R2 for use with the Hyper-V role. A Cluster Shared Volume is a shared disk containing an NTFS or ReFS (ReFS: Windows Server 2012 R2 or newer) volume that is made accessible for read and write operations by all nodes within a Windows Server Failover Cluster.

Problem

Backup jobs fail due to Microsoft Volume Shadow Copy Service (VSS) related errors indicating either a lack of disk space or I/O that is too high to maintain the shadow copies.

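List the current shadow copy storage associations:

vssadmin list shadowstorage

List the existing shadow copies:

vssadmin list shadows

Resize the shadow copy storage for C: to a maximum of 50 GB:

vssadmin resize shadowstorage /for=C: /on=C: /maxsize=50GB

Add a storage association that keeps the shadow copies on a different drive:

vssadmin add shadowstorage /for=<drive being backed up> /on=<drive to store the shadow copy> /maxsize=<maximum size, as a size or a percentage of disk space>

For example:

vssadmin add shadowstorage /for=d: /on=c: /maxsize=90%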
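The shadowstorage syntax above can be sketched as a small helper that assembles the command line from its three parameters. This is only an illustration: the function name `shadowstorage_command` is hypothetical, and the helper builds a string without running `vssadmin`.

```python
def shadowstorage_command(action, for_volume, on_volume, maxsize):
    """Build a 'vssadmin ... shadowstorage' command line (string only, not executed)."""
    # Only the two actions shown in the article are accepted here.
    if action not in ("add", "resize"):
        raise ValueError("action must be 'add' or 'resize'")
    return (
        f"vssadmin {action} shadowstorage "
        f"/for={for_volume} /on={on_volume} /maxsize={maxsize}"
    )


# Rebuild the two concrete commands from the article.
print(shadowstorage_command("resize", "C:", "C:", "50GB"))
print(shadowstorage_command("add", "d:", "c:", "90%"))
```

Keeping the command as a string makes it easy to review before pasting it into an elevated command prompt.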

References to CSV as FC cluster-quorum

Storage Spaces Direct as quorum in FC (2019 Failover Cluster)

Reset Terminal license on Windows 2008, 2008 R2 and 2012

This document will help you temporarily fix the RDP licensing issue that occurs when logging in to the servers.

NLB 2008

Network load balancing (commonly referred to as dual-WAN routing or multihoming) is the ability to balance traffic across two WAN links without using complex routing protocols like BGP.

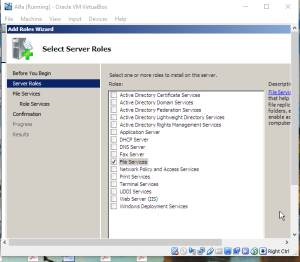

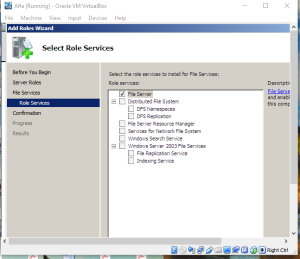

DFS 2012

The Distributed File System (DFS) technologies offer wide area network (WAN)-friendly replication as well as simplified, highly available access to geographically dispersed files. The Distributed File System role service consists of two child role services:

• DFS Namespaces

• DFS Replication

DFS offers the following benefits:

• Shared folders on a network appear in one hierarchy of folders, created by a DFS root with links. This simplifies user access.

• Fault tolerance is available by replicating shared folders. In Windows Server 2012 this is handled by the DFS Replication role service, which replaced the older File Replication Service (FRS) for this purpose.

• Load balancing can be performed by distributing folder access across several servers.

There are two DFS models as follows:

Stand-alone

• No Active Directory implementation

• Can implement load balancing, but replication of shares is manual

• The DFS root cannot be replicated

• Accessed by \\Server_Name.Domain_Name\DFS_Root_Name

Choose a stand-alone namespace if any of the following conditions apply to your environment:

• Your organization does not use Active Directory Domain Services (AD DS).

• You need to create a single namespace with more than 5,000 DFS folders in a domain that does not meet the requirements for a domain-based namespace (Windows Server 2008 mode).

• You want to increase the availability of the namespace by using a failover cluster.

Domain-based

• Available only to members of a domain

• Can implement fault tolerance through root and link replication, as well as load balancing; replication of links and the root is automatic

• Accessed by \\Domain_Name\DFS_Root_Name

Choose a domain-based namespace if any of the following conditions apply to your environment:

• You want to ensure the availability of the namespace by using multiple namespace servers.

• You want to hide the name of the namespace server from users. Choosing a domain-based namespace makes it easier to replace the namespace server or migrate the namespace to another server.
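The difference between the two access paths can be sketched as follows. The function names and the sample server, domain, and root names (`fs01`, `corp.example.com`, `Public`) are hypothetical and only illustrate the two path patterns given above.

```python
def standalone_path(server, domain, root):
    """Stand-alone namespace: \\\\Server_Name.Domain_Name\\DFS_Root_Name."""
    return "\\\\{}.{}\\{}".format(server, domain, root)


def domain_based_path(domain, root):
    """Domain-based namespace: \\\\Domain_Name\\DFS_Root_Name (no server name exposed)."""
    return "\\\\{}\\{}".format(domain, root)


# Hypothetical example names, for illustration only.
print(standalone_path("fs01", "corp.example.com", "Public"))
print(domain_based_path("corp.example.com", "Public"))
```

Because the domain-based path contains no server name, the namespace server can be replaced without changing the path that users see.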

DFS Topology:

• The DFS root (a table of contents): the main container that holds links to shared folders. Folders from all domain computers appear as if they reside in one main folder.

• DFS links (pointers to shares): the designated access path between the DFS root and shared folders.

• Replica sets (targets, that is, duplicated shares): a set of shared folders that is replicated to one or more servers in a domain.

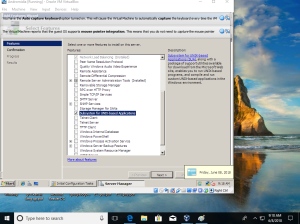

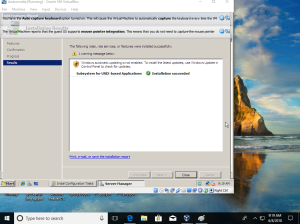

Services for UNIX discontinued

Windows Services for UNIX (SFU) is a discontinued software package produced by Microsoft which provided a Unix environment on Windows NT and some of its immediate successor operating systems.

SFU 1.0 and 2.0 used the MKS Toolkit; starting with SFU 3.0, SFU included the Interix subsystem, which was acquired by Microsoft in 1999 from US-based Softway Systems as part of an asset acquisition. SFU 3.5 was the last release and was available as a free download from Microsoft. Windows Server 2003 R2 included most of the former SFU components (on Disk 2), naming the Interix subsystem component Subsystem for UNIX-based Applications (SUA). In Windows Server 2008 and high-end versions of both Windows Vista and Windows 7 (Enterprise and Ultimate), a minimal Interix SUA was included, but most of the other SFU utilities had to be downloaded separately from Microsoft’s web site.

SFU 1 release contains

Telnet server/Client

UNIX Utilities (from MKS)

Server for NFS/Client for NFS

Server for NFS Authentication

Windows NT to UNIX password-synchronization

SFU 2 release added

Gateway for NFS (NFSGateway)

Server for PCNFS (Pcnfsd)

User Name Mapping (Mapsvc)

Server for NIS (NIS)

ActiveState ActivePerl (Perl)

Cron service (CronSvc)

Windows Remote Shell Service-Rsh service (RshSvc)

SFU 3 release contains

Base Utilities for Interix (BaseUtils; including X11R5 utilities)

UNIX Perl for Interix (UNIXPerl)

Interix SDK (InterixSDK; including headers and libraries for development and a wrapper for Visual Studio compiler)

GNU Utilities for Interix (GNUUtils, about 9 utilities in total)

GNU SDK for Interix (GNUSDK; including gcc and g++)

SFU 3.5 release

This was the final release of SFU and the only release to be distributed free of charge. It was released for Windows 2000, Windows XP Professional, and Windows Server 2003 (original release only) on x86 platforms. It included Interix subsystem release 3.5 (build version 8.0), adding internationalization support (at least for the English version, which had lacked it until then) and POSIX threading. This release could only be installed on an NTFS file system (earlier versions supported FAT); this was because of the stricter file-security requirements in Interix 3.5. The following UNIX versions were supported for NFS components: Solaris 7 and 8, Red Hat Linux 8.0, AIX 5L 5.2, and HP-UX 11i.

Note: MKS Toolkit is a software package produced and maintained by PTC Inc. that provides a Unix-like environment for scripting, connectivity, and porting Unix and Linux software to both 32- and 64-bit Microsoft Windows systems. It was originally created for MS-DOS, and OS/2 versions were released up to version 4.4. Several levels of each version are available, such as MKS Toolkit for Developers, Power Users, Enterprise Developers, and Interoperability, with Enterprise Developer being the most complete. Before PTC, MKS Toolkit was owned by MKS Inc. In 1999, MKS acquired Datafocus Inc., a company based in Fairfax, Virginia, USA. The Datafocus product NuTCRACKER had included the MKS Toolkit since 1994 as part of its Unix compatibility technology. The MKS Toolkit was also licensed by Microsoft for the first two versions of Windows Services for UNIX, but was later dropped in favor of Interix after Microsoft acquired Interix from Softway Systems.

Services for NFS

Services for Network File System (NFS) provides a file-sharing solution for enterprises that have a mixed Windows and UNIX environment. Services for NFS enables users to transfer files between computers running the Windows Server 2008 operating system and UNIX-based computers using the NFS protocol.

New in Services for NFS

Active Directory Lookup. The Identity Management for UNIX Active Directory schema extension includes UNIX user identifier (UID) and group identifier (GID) fields. This enables Server for NFS and Client for NFS to look up Windows-to-UNIX user account mappings directly from Active Directory Domain Services. Identity Management for UNIX simplifies Windows-to-UNIX user account mapping management in Active Directory Domain Services.

64-bit support. Services for NFS components can be installed on all editions of Windows Server 2008, including 64-bit editions.

Enhanced server performance. Services for NFS includes a file filter driver, which significantly reduces common server file access latencies.

Unix special device support. Services for NFS supports UNIX special devices (mknod).

Enhanced Unix support. Services for NFS supports the following versions of UNIX: Sun Microsystems Solaris version 9, Red Hat Linux version 9, IBM AIX version 5L 5.2, and Hewlett Packard HP-UX version 11i.

Services for NFS components

Services for NFS includes the following components:

Server for NFS. Normally, a UNIX-based computer cannot access files on a Windows-based computer. A computer running Windows Server 2008 and Server for NFS, however, can act as a file server for both Windows-based and UNIX-based computers.

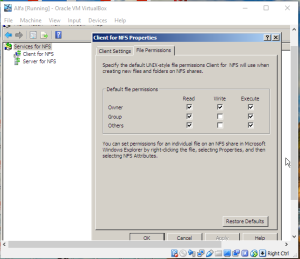

Client for NFS. Normally, a Windows-based computer cannot access files on a UNIX-based computer. A computer running Windows Server 2008 and Client for NFS, however, can access files stored on a UNIX-based NFS server.

Command-line tools. Services for NFS provides a Microsoft Management Console (MMC) snap-in for administration, as well as several command-line tools:

• mapadmin: Administers User Name Mapping.

• mount: Mounts NFS shared network resources.

• nfsadmin: Manages Server for NFS and Client for NFS.

• nfsshare: Controls NFS shared resources.

• nfsstat: Displays or resets counts of calls made to Server for NFS.

• showmount: Displays mounted file systems exported by Server for NFS.

• umount: Removes NFS-mounted drives.

To open ports in Windows Firewall

1. On a computer running the User Name Mapping service or Server for NFS, click Start, click Run, type firewall.cpl, and then click OK.

2. Click the Exceptions tab, and then click Add Port.

3. In Name, type the name of a port to open, as listed in the following table.

4. In Port number, type the corresponding port number.

5. Select TCP or UDP and click OK.

6. Repeat steps 2 through 5 for each port to open, and then click OK when finished.

| Services for NFS component | Port to open | Protocol | Port |
|---|---|---|---|
| User Name Mapping and Server for NFS | Portmapper | TCP, UDP | 111 |
| Server for NFS | Network Status Manager | TCP, UDP | 1039 |
| Server for NFS | Network Lock Manager | TCP, UDP | 1047 |
| Server for NFS | NFS Mount | TCP, UDP | 1048 |
| Server for NFS | Network File System | TCP, UDP | 2049 |
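As an alternative to clicking through the dialog once per port, the port list above could be turned into firewall commands. The sketch below assumes that `netsh advfirewall` is available on the server; that is an assumption, since the original procedure only describes the firewall.cpl dialog. The rule names and the helper `firewall_commands` are hypothetical, and the script only prints the commands, it does not run them.

```python
# Ports taken from the table above: (port name, port number).
NFS_PORTS = [
    ("Portmapper", 111),
    ("Network Status Manager", 1039),
    ("Network Lock Manager", 1047),
    ("NFS Mount", 1048),
    ("Network File System", 2049),
]


def firewall_commands(ports=NFS_PORTS, protocols=("TCP", "UDP")):
    """Return one netsh command string per port and protocol (nothing is executed)."""
    commands = []
    for name, port in ports:
        for protocol in protocols:
            commands.append(
                f'netsh advfirewall firewall add rule name="{name} ({protocol})" '
                f"dir=in action=allow protocol={protocol} localport={port}"
            )
    return commands


for command in firewall_commands():
    print(command)
```

Each port gets two rules because the table lists both TCP and UDP for every entry.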

https://docs.microsoft.com/en-us/previous-versions/windows/it-pro/windows-server-2012-R2-and-2012/jj574143(v=ws.11)

https://www.howtoforge.com/nfs-server-on-ubuntu-14.10

https://www.digitalocean.com/community/tutorials/how-to-set-up-an-nfs-mount-on-ubuntu-14-04

https://help.ubuntu.com/community/SettingUpNFSHowTo

https://help.ubuntu.com/lts/serverguide/network-file-system.html.en

Storage Spaces Direct as quorum in FC (2019 Failover Cluster)

Storage Spaces Direct uses industry-standard servers with local-attached drives to create highly available, highly scalable software-defined storage at a fraction of the cost of traditional SAN or NAS arrays. Its converged or hyper-converged architecture radically simplifies procurement and deployment, while features such as caching, storage tiers, and erasure coding, together with the latest hardware innovations such as RDMA networking and NVMe drives, deliver unrivaled efficiency and performance.

Storage Spaces Direct as quorum in 2019

What’s new in Windows Server 2019

•Cluster sets

Cluster sets enable you to increase the number of servers in a single software-defined datacenter (SDDC) solution beyond the current limits of a cluster. This is accomplished by grouping multiple clusters into a cluster set, a loosely coupled grouping of multiple failover clusters (compute, storage, and hyper-converged). With cluster sets, you can move online virtual machines (live migrate) between clusters within the cluster set.

•Azure-aware clusters

Failover clusters now automatically detect when they’re running in Azure IaaS virtual machines and optimize the configuration to provide proactive failover and logging of Azure planned maintenance events to achieve the highest levels of availability. Deployment is also simplified by removing the need to configure the load balancer with Dynamic Network Name for cluster name.

•Cross-domain cluster migration

Failover Clusters can now dynamically move from one Active Directory domain to another, simplifying domain consolidation and allowing clusters to be created by hardware partners and joined to the customer’s domain later.

•USB witness

You can now use a simple USB drive attached to a network switch as a witness in determining quorum for a cluster. This extends the File Share Witness to support any SMB2-compliant device.

•Cluster infrastructure improvements

The CSV cache is now enabled by default to boost virtual machine performance. MSDTC now supports Cluster Shared Volumes, to allow deploying MSDTC workloads on Storage Spaces Direct such as with SQL Server. Enhanced logic to detect partitioned nodes with self-healing to return nodes to cluster membership. Enhanced cluster network route detection and self-healing.

•Cluster Aware Updating supports Storage Spaces Direct

Cluster Aware Updating (CAU) is now integrated and aware of Storage Spaces Direct, validating and ensuring data resynchronization completes on each node. Cluster Aware Updating inspects updates to intelligently restart only if necessary. This enables orchestrating restarts of all servers in the cluster for planned maintenance.

•File share witness enhancements

We enabled the use of a file share witness in the following scenarios:

◦Absent or extremely poor Internet access because of a remote location, preventing the use of a cloud witness.

◦Lack of shared drives for a disk witness. This could be a Storage Spaces Direct hyperconverged configuration, a SQL Server Always On Availability Group (AG), or an Exchange Database Availability Group (DAG), none of which use shared disks.

◦Lack of a domain controller connection due to the cluster being behind a DMZ.

◦A workgroup or cross-domain cluster for which there is no Active Directory cluster name object (CNO).

Find out more about these enhancements in the following post in Server & Management Blogs: Failover Cluster File Share Witness and DFS.

We now also explicitly block the use of a DFS Namespaces share as a location. Adding a file share witness to a DFS share can cause stability issues for your cluster, and this configuration has never been supported. We added logic to detect if a share uses DFS Namespaces, and if DFS Namespaces is detected, Failover Cluster Manager blocks creation of the witness and displays an error message about not being supported.

•Cluster hardening

Intra-cluster communication over Server Message Block (SMB) for Cluster Shared Volumes and Storage Spaces Direct now leverages certificates to provide the most secure platform. This allows Failover Clusters to operate with no dependencies on NTLM and enable security baselines.

•Failover Cluster no longer uses NTLM authentication

Failover Clusters no longer use NTLM authentication. Instead, Kerberos and certificate-based authentication are used exclusively. No changes are required by the user, or by deployment tools, to take advantage of this security enhancement. It also allows failover clusters to be deployed in environments where NTLM has been disabled.

2016 Failover cluster using Azure blob as a cluster quorum

Quorum is designed to prevent split-brain scenarios which can happen when there is a partition in the network and subsets of nodes cannot communicate with each other. This can cause both subsets of nodes to try to own the workload and write to the same disk which can lead to numerous problems. However, this is prevented with Failover Clustering’s concept of quorum which forces only one of these groups of nodes to continue running, so only one of these groups will stay online.

Cloud storage as a quorum witness:

Cloud Witness is a new type of Failover Cluster quorum witness that leverages Microsoft Azure as the arbitration point. It uses Azure Blob Storage to read/write a blob file which is then used as an arbitration point in case of split-brain resolution.

It is possible for a cluster to span more than 2 datacenters, and each datacenter can have more than 2 nodes. A typical cluster quorum configuration in this setup (automatic failover SLA) gives each node a vote. One extra vote is given to the quorum witness to allow the cluster to keep running even if either one of the datacenters experiences a power outage. The math is simple: with, for example, two nodes in each of two datacenters plus the witness, there are 5 total votes, and you need 3 votes for the cluster to keep running.
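The vote arithmetic can be checked with a short sketch. It assumes a layout of two datacenters with two nodes each plus one witness vote, which yields the 5 total votes mentioned above. The function names are hypothetical, and the majority rule used here (more than half of all votes) is a simplification of how the cluster service evaluates quorum.

```python
def votes_needed(total_votes):
    """Smallest number of votes that is a strict majority of total_votes."""
    return total_votes // 2 + 1


def has_quorum(votes_online, total_votes):
    """True if the votes still online form a majority."""
    return votes_online >= votes_needed(total_votes)


# Assumed layout for this example: 2 datacenters x 2 nodes, plus 1 witness vote.
nodes_per_datacenter = 2
datacenters = 2
witness_votes = 1
total = nodes_per_datacenter * datacenters + witness_votes

print("total votes:", total)
print("votes needed:", votes_needed(total))

# One datacenter loses power: the surviving nodes plus the witness remain.
print("with witness:", has_quorum(nodes_per_datacenter + witness_votes, total))

# Same outage, but the witness is also unreachable.
print("without witness:", has_quorum(nodes_per_datacenter, total))
```

In this layout the witness vote is what lets the surviving datacenter reach a majority after an outage.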